A story about building coding agents that refused to follow instructions—and discovered better solutions. Plus, why the rigid world of accounting might need a completely different approach.

The Test That Changed Everything

We were evaluating our complex problem-solving agent with a particularly tricky test case—one of dozens we'd selected to push its limits. The scenario: a React application's tests were mysteriously failing in the CI pipeline but passing locally.

We'd programmed the agent with a standard debugging playbook: Check environment differences → Compare dependencies → Analyze test output → Apply known fixes. Simple, reliable, tested a thousand times.

But the agent did something we didn't expect.

Instead of following our flowchart, it started reasoning through the problem like a senior developer would. It noticed the failures only happened in parallel test runs. Then it spotted that they all involved date-time operations. It dug into the test configuration and discovered that the CI environment was in UTC while local machines used various timezones.

But here's where it got interesting. Rather than just adding timezone mocking (the obvious fix), the agent analyzed the entire codebase and identified that the root cause was inconsistent date handling across multiple components. It proposed a comprehensive solution: implementing a centralized date utility with proper timezone handling, updating all components to use it, and adding timezone-aware tests.
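To make the idea of a centralized date utility concrete, here is a minimal sketch. The application in the story was a React codebase, so this is a language-agnostic illustration written in Python, not the agent's actual output. The names `to_utc` and `same_calendar_day` and the fixed UTC-8 offset are assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone


def to_utc(moment: datetime) -> datetime:
    """Normalize a timezone-aware datetime to UTC; reject naive values."""
    if moment.tzinfo is None or moment.utcoffset() is None:
        raise ValueError("naive datetime: attach a timezone before calling to_utc")
    return moment.astimezone(timezone.utc)


def same_calendar_day(a: datetime, b: datetime, display_tz: timezone) -> bool:
    """Compare two instants by calendar date in one explicit display timezone."""
    day_a = to_utc(a).astimezone(display_tz).date()
    day_b = to_utc(b).astimezone(display_tz).date()
    return day_a == day_b


# Hypothetical scenario: one instant seen from a UTC-8 laptop and a UTC CI runner.
PACIFIC = timezone(timedelta(hours=-8))
local_evening = datetime(2024, 3, 1, 20, 30, tzinfo=PACIFIC)
utc_instant = to_utc(local_evening)

# The raw .date() values disagree even though both describe the same instant,
# which is the kind of mismatch that passes locally and fails in CI.
print(local_evening.date(), utc_instant.date())
print(same_calendar_day(local_evening, utc_instant, PACIFIC))
```

Routing every comparison through one helper that demands an explicit timezone is what removes the dependence on where the test happens to run.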

Twenty minutes. Zero human intervention. A solution that not only fixed the immediate issue but prevented an entire class of future bugs.

Here's the thing: We never programmed it to think that holistically.

This wasn't luck. It was the moment we realized our orchestration frameworks—those carefully crafted workflows we'd spent months perfecting—were actually limiting what modern AI could achieve.

Part I: The Prison We Built for Our Agents

For years, we thought we were being smart. Every possible scenario got its own carefully designed path:

We had dozens of these flowcharts. Hundreds of conditional statements. A pathway for every scenario we could imagine.

The problem? Reality doesn't follow flowcharts.

Users would report issues that didn't fit our categories. Systems would fail in ways we hadn't anticipated. New frameworks would emerge that our workflows didn't recognize. And every time, our response was the same: add more paths, more conditions, more complexity.

Our codebase grew. Our maintenance burden exploded. And our agents kept saying, "Sorry, I don't know how to handle that."

Part II: The Accidental Discovery

The breakthrough came from frustration, not planning.

One of our senior engineers, exhausted from updating workflows, made a radical change. Instead of telling the agent HOW to solve problems, he just gave it tools and context, then asked it to figure things out.

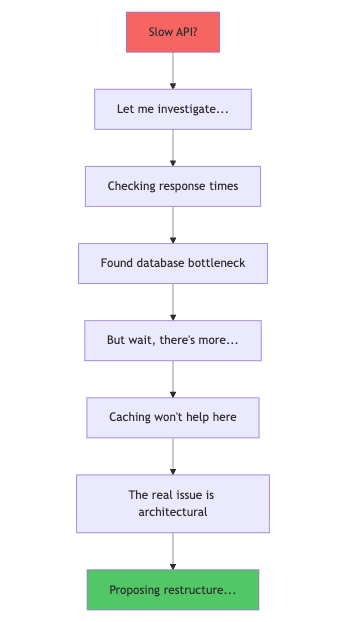

The first test was simple: optimize a slow API endpoint.

The Old Way: The agent would follow our optimization flowchart—check response times, analyze database queries, suggest caching. Predictable, limited, safe.

The New Way: We watched in amazement as the agent's reasoning unfolded: "I notice the API is slow, but it's not just the database. The service is making redundant calls because of how the middleware handles authentication. Also, the data model forces unnecessary joins. Here's a comprehensive solution addressing all three issues..."

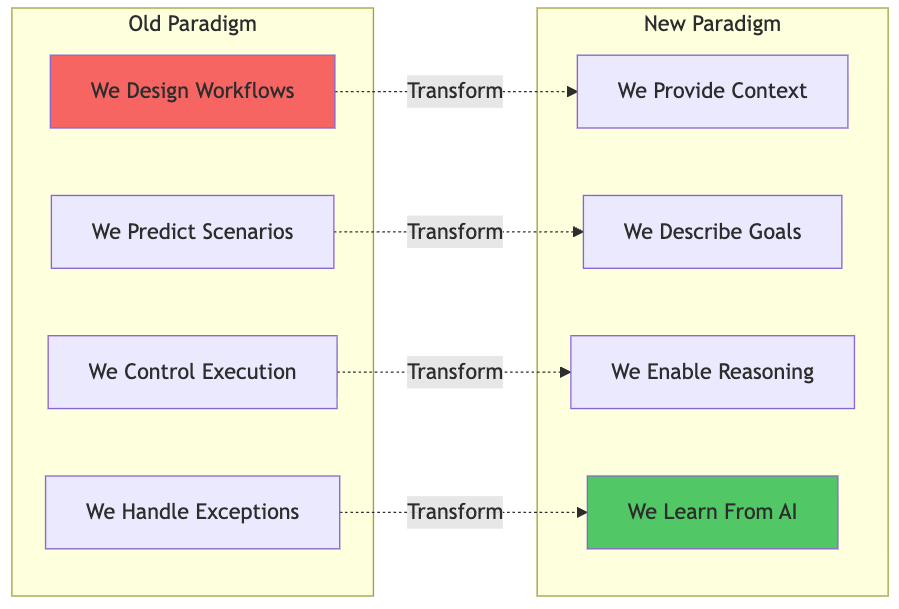

Part III: The Great Paradigm Shift

We realized we'd been thinking about AI agents all wrong:

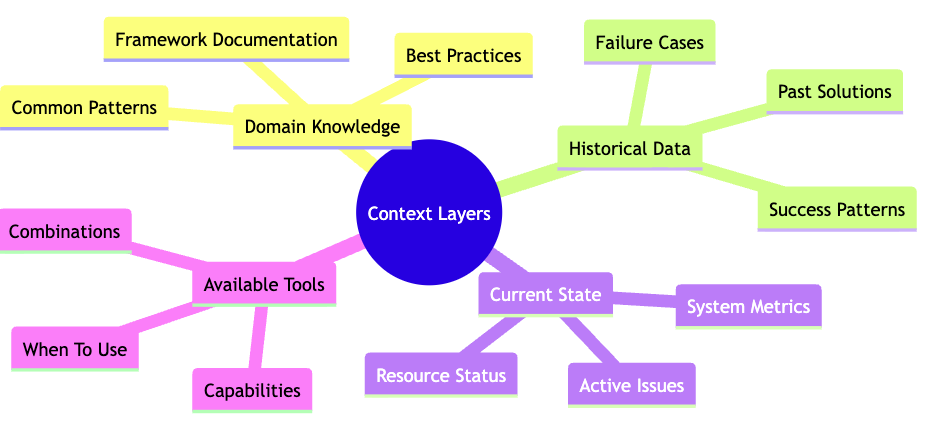

Instead of building elaborate control structures, we started focusing on three things:

1. Rich Context Architecture

2. Clear Objectives (Not Instructions)

Old Way: "First check the database, then analyze queries, then optimize indexes, then test performance."

New Way: "Make this API fast and reliable. Here's what you need to know about our system..."
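The contrast between instructions and objectives can be sketched in a few lines. This is an illustration under stated assumptions, not our production framework: the tool names are invented, and `stub_policy` is a hard-coded stand-in for the model call that would normally choose the next action.

```python
from typing import Callable, Dict, List, Optional

Tool = Callable[[], str]
# A policy sees the goal and the observations so far, then names the next
# tool to run, or returns None when it considers the goal met.
Policy = Callable[[str, List[str]], Optional[str]]


def run_fixed_workflow(steps: List[str], tools: Dict[str, Tool]) -> List[str]:
    """Old way: run every step in the prescribed order, whatever it finds."""
    return [tools[name]() for name in steps]


def run_goal_driven(goal: str, tools: Dict[str, Tool], policy: Policy,
                    max_steps: int = 10) -> List[str]:
    """New way: the policy picks the next tool based on what it has seen."""
    observations: List[str] = []
    for _ in range(max_steps):
        choice = policy(goal, observations)
        if choice is None:
            break
        observations.append(tools[choice]())
    return observations


# Hypothetical tools returning canned findings.
tools: Dict[str, Tool] = {
    "profile_endpoint": lambda: "auth middleware issues redundant calls",
    "analyze_queries": lambda: "queries use unnecessary joins",
    "check_indexes": lambda: "indexes look fine",
}


def stub_policy(goal: str, observations: List[str]) -> Optional[str]:
    """Stand-in for a model: profile first, then inspect queries, then stop."""
    if not observations:
        return "profile_endpoint"
    if len(observations) == 1:
        return "analyze_queries"
    return None


fixed = run_fixed_workflow(["check_indexes", "analyze_queries"], tools)
adaptive = run_goal_driven("Make this API fast and reliable", tools, stub_policy)
```

The fixed workflow never profiles the endpoint because nobody wrote that step into it, while the goal-driven loop can reach any tool it was given. The `max_steps` cap is one simple way to keep such a loop bounded.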

3. Learning Loops

Every decision, success, and failure became part of the agent's context for future problems. The system got smarter without us writing more code.
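One simple way to picture such a loop is a bounded log of past episodes that gets rendered back into the agent's context. The sketch below is an assumption about shape only: `Episode`, `EpisodeMemory`, and the keyword matching are hypothetical, and a real system would likely use a more capable retrieval step.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque


@dataclass(frozen=True)
class Episode:
    problem: str
    action: str
    succeeded: bool


class EpisodeMemory:
    """Keeps the most recent episodes and renders relevant ones as context."""

    def __init__(self, limit: int = 50) -> None:
        # deque(maxlen=...) silently drops the oldest episode when full.
        self._episodes: Deque[Episode] = deque(maxlen=limit)

    def record(self, episode: Episode) -> None:
        self._episodes.append(episode)

    def as_context(self, keyword: str) -> str:
        """Return one line per past episode whose problem mentions the keyword."""
        needle = keyword.lower()
        lines = [
            f"- {e.problem}: {e.action} ({'worked' if e.succeeded else 'failed'})"
            for e in self._episodes
            if needle in e.problem.lower()
        ]
        return "\n".join(lines)


memory = EpisodeMemory(limit=2)
memory.record(Episode("CI timezone failures", "centralized date utility", True))
memory.record(Episode("slow API endpoint", "added caching only", False))
context = memory.as_context("timezone")
```

The string returned by `as_context` would be prepended to the next prompt, so past outcomes inform new decisions without any new workflow code.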

Part IV: The Secret Sauce!

So far, nothing I have written should surprise anyone who has been building AI agents. However, the advantage of learning through hands-on experience is that you always discover something specific that can give you a temporary competitive edge in making your agents more useful. Some of those discoveries should stay secret, and some may no longer be relevant after the release of the latest models. However, if you join our team at FloQast, we can all experiment, discover, and learn new secrets together :)

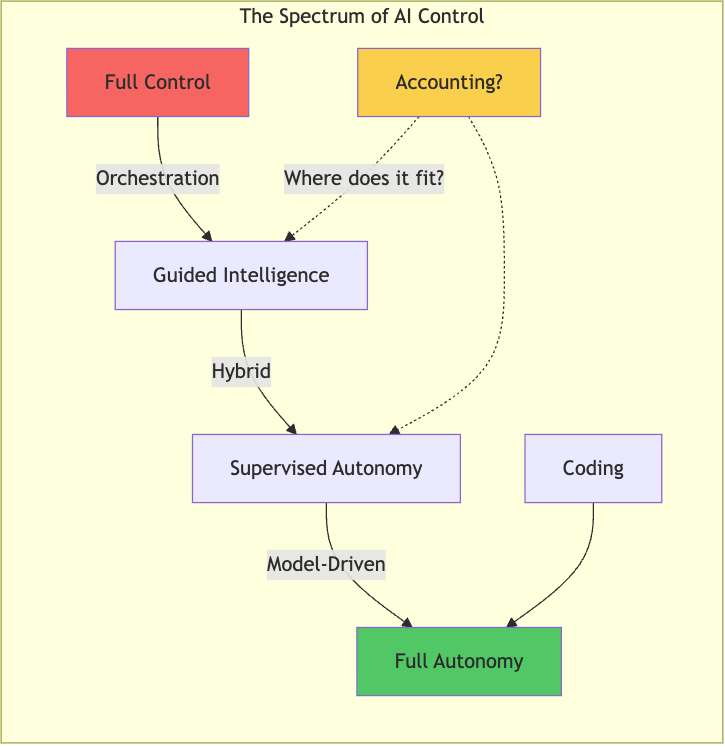

Part V: The Accounting Question

At my new job at FloQast, we started wondering: Could this work for accounting?

At first glance, it seems perfect. Imagine AI agents that could:

- Handle month-end close in hours, not days

- Catch discrepancies humans miss

- Optimize workflows automatically

- Learn from every transaction

But then we looked closer at what makes accounting different:

- Every Penny Must Balance

- Regulations Are Non-Negotiable

- Audit Trails Are Mandatory

- Creativity Can Be a Liability

- Errors Have Legal Consequences

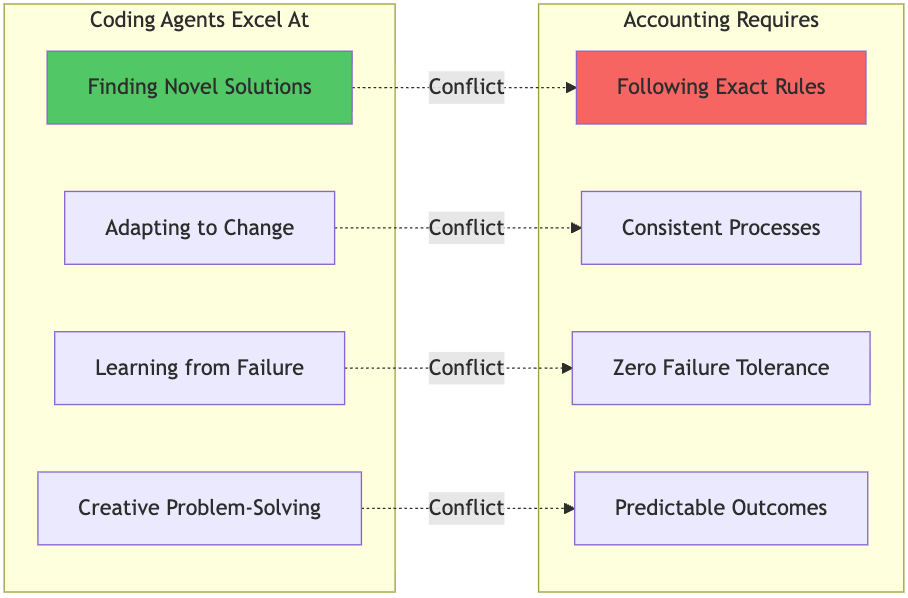

The Fundamental Tension

In coding, when our agent finds a creative solution, we celebrate. In accounting, when an AI "creatively" interprets GAAP guidelines, people can go to jail.

Looking into the future of AI Agents for Accounting!

Where rigid workflows might actually help:

- Regulatory Compliance: Some processes must follow exact sequences

- Auditability: Every decision needs clear documentation

- Consistency: Same inputs must produce same outputs

- Risk Management: Predictability over innovation
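The points above suggest wrapping any agent proposal in a deterministic check that also writes an audit record. The sketch below is purely illustrative and does not describe how FloQast handles this: `Line`, `validate_entry`, and `review_proposal` are hypothetical names, and a real system would enforce far more rules than debits equaling credits.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import List, Tuple


@dataclass(frozen=True)
class Line:
    account: str
    debit: Decimal = Decimal("0")
    credit: Decimal = Decimal("0")


def validate_entry(lines: List[Line]) -> Tuple[bool, str]:
    """Deterministic rule: no negative amounts, and debits must equal credits."""
    if any(line.debit < 0 or line.credit < 0 for line in lines):
        return False, "negative amount"
    total_debit = sum((line.debit for line in lines), Decimal("0"))
    total_credit = sum((line.credit for line in lines), Decimal("0"))
    if total_debit != total_credit:
        return False, f"out of balance by {total_debit - total_credit}"
    return True, "balanced"


def review_proposal(lines: List[Line], audit_log: List[str]) -> bool:
    """Accept or reject a proposed entry and record the decision either way."""
    ok, reason = validate_entry(lines)
    audit_log.append(f"{'ACCEPTED' if ok else 'REJECTED'}: {reason}")
    return ok


audit_log: List[str] = []
good = [Line("Cash", debit=Decimal("100.00")), Line("Revenue", credit=Decimal("100.00"))]
bad = [Line("Cash", debit=Decimal("100.00")), Line("Revenue", credit=Decimal("99.99"))]
accepted = review_proposal(good, audit_log)
rejected = review_proposal(bad, audit_log)
```

Using `Decimal` rather than floats keeps the comparison exact to the penny, and the same inputs always produce the same verdict and the same log line.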

Where intelligence could add value:

- Complex judgment calls (materiality assessments)

- Pattern recognition (fraud detection)

- Exception handling (unusual transactions)

- Process optimization (finding inefficiencies)

While we at FloQast have our own hypotheses and experiments to run, I would love to learn how others are thinking about solving these kinds of problems. If you are interested in working on them, please consider reaching out and exploring positions on the Core AI team in San Jose, CA and Pune, India.